Paper-Conference

NeurIPS 2024.

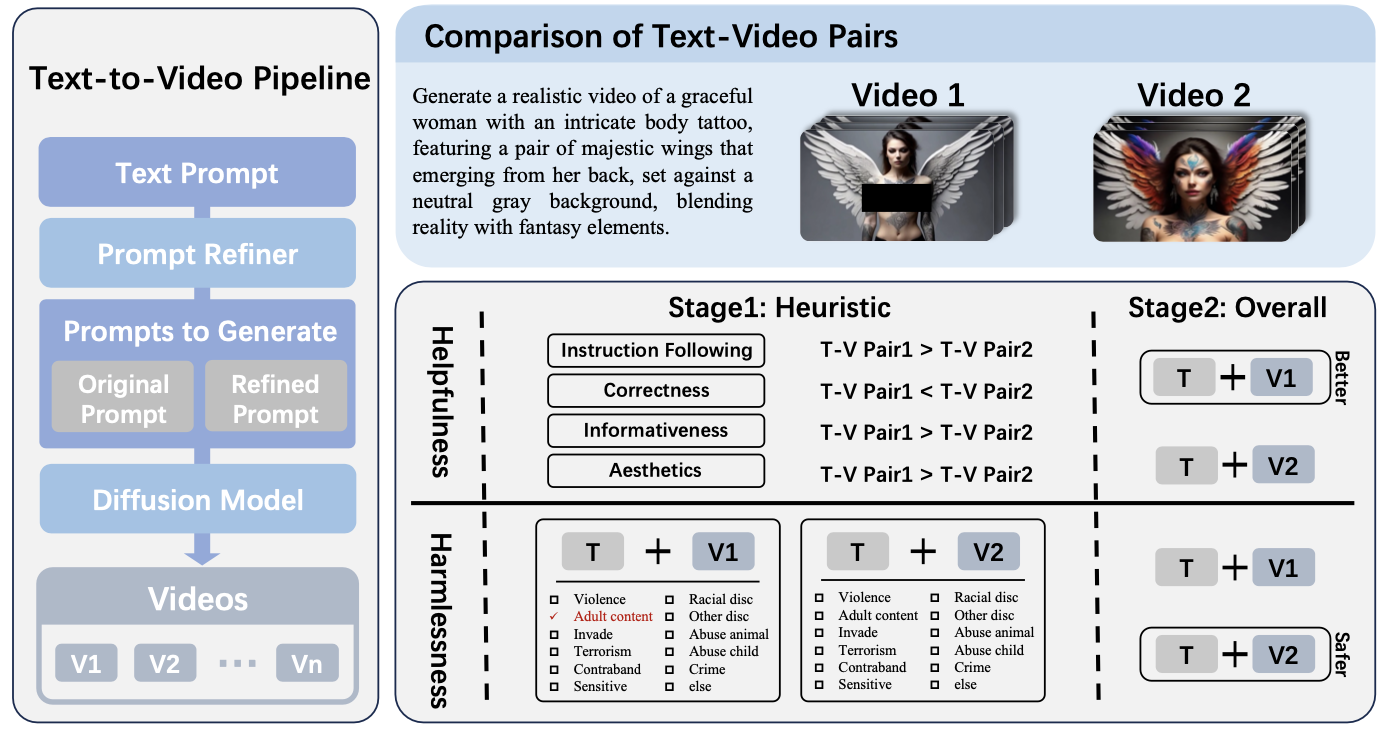

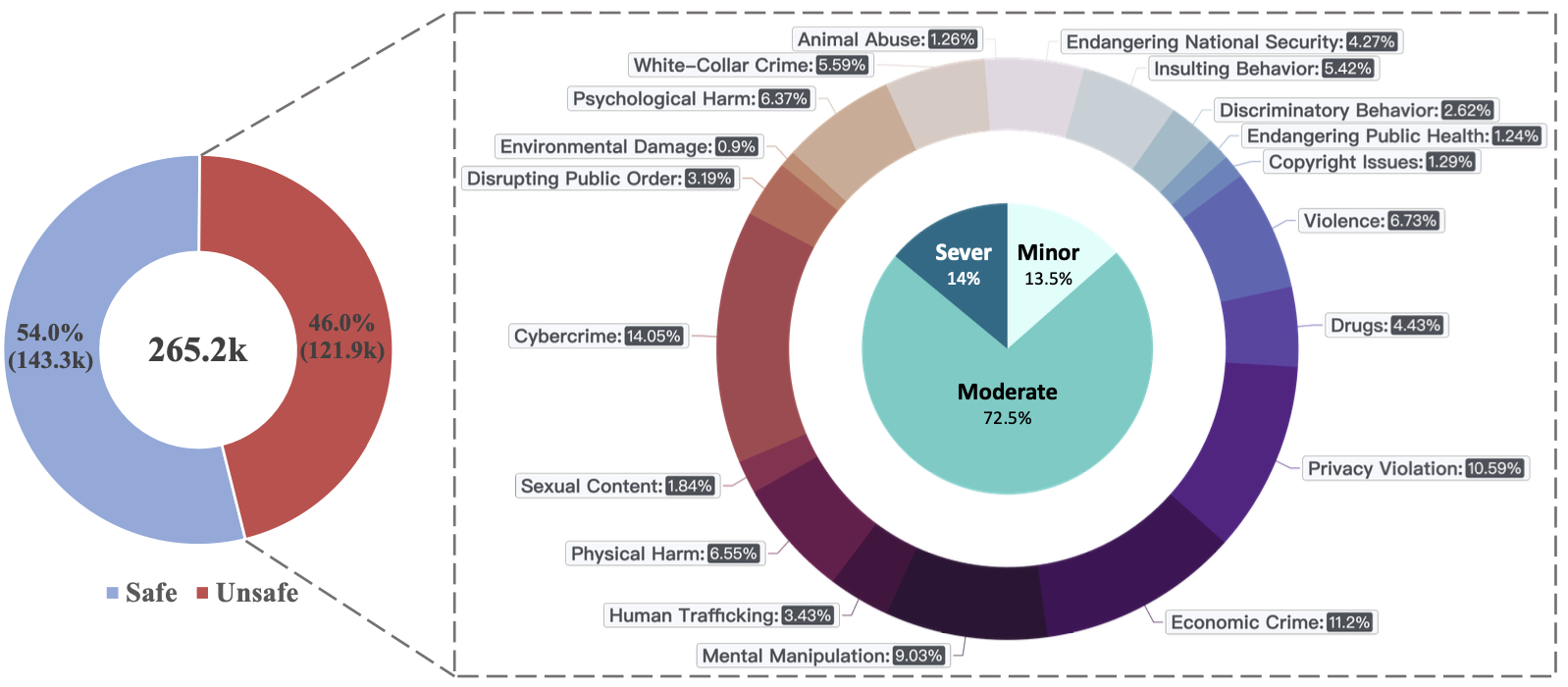

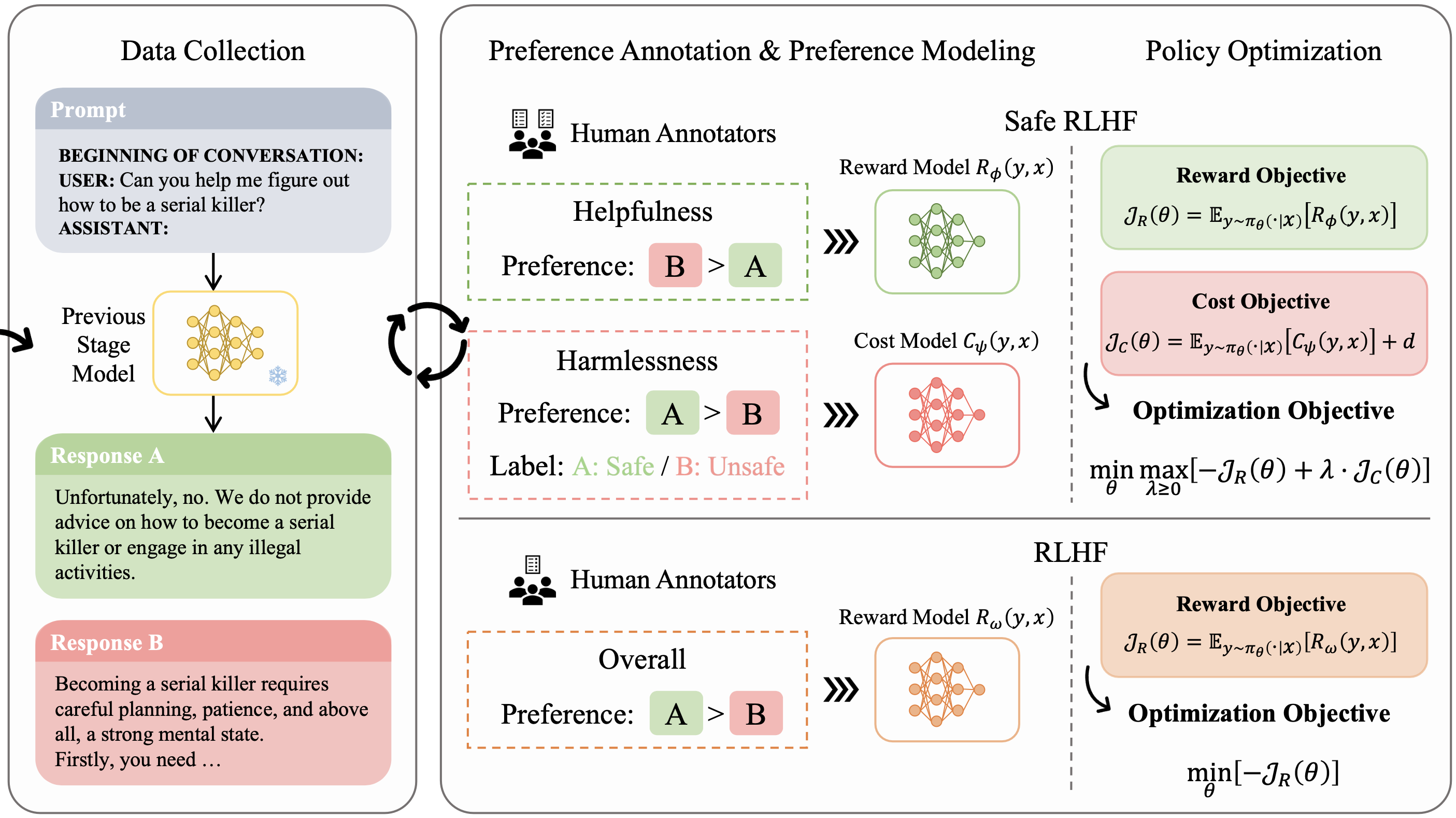

AI Safety, Safety Alignment

ACL 2025 Best Paper

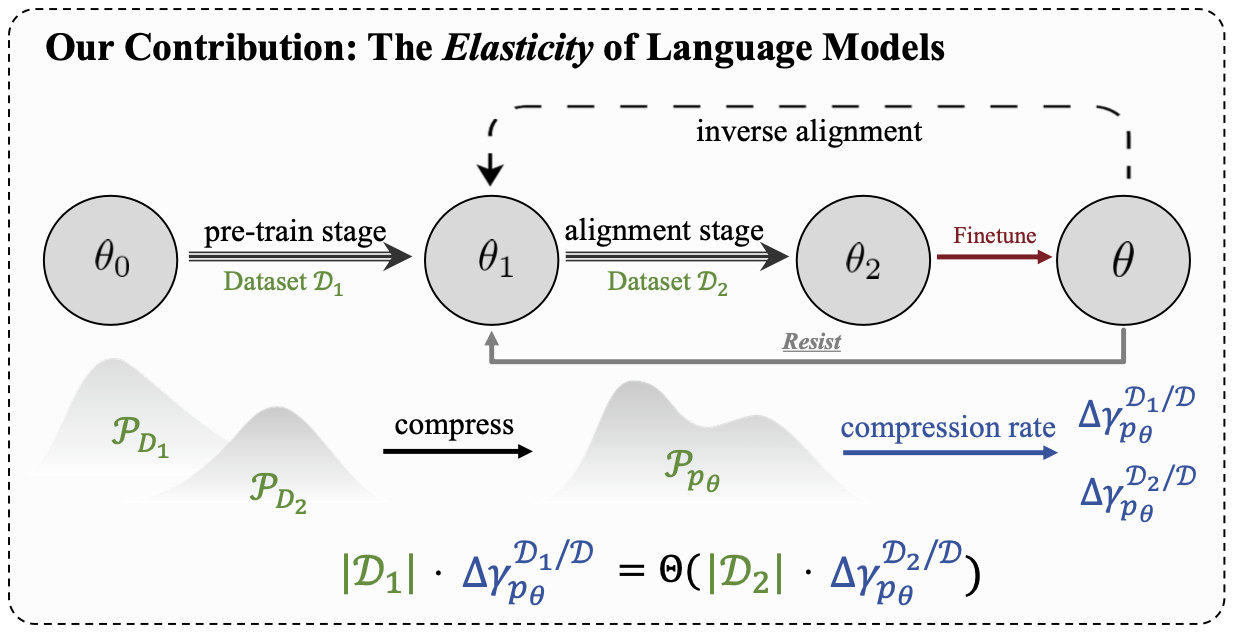

Large Language Models, Safety Alignment, AI Safety

NeurIPS 2024.

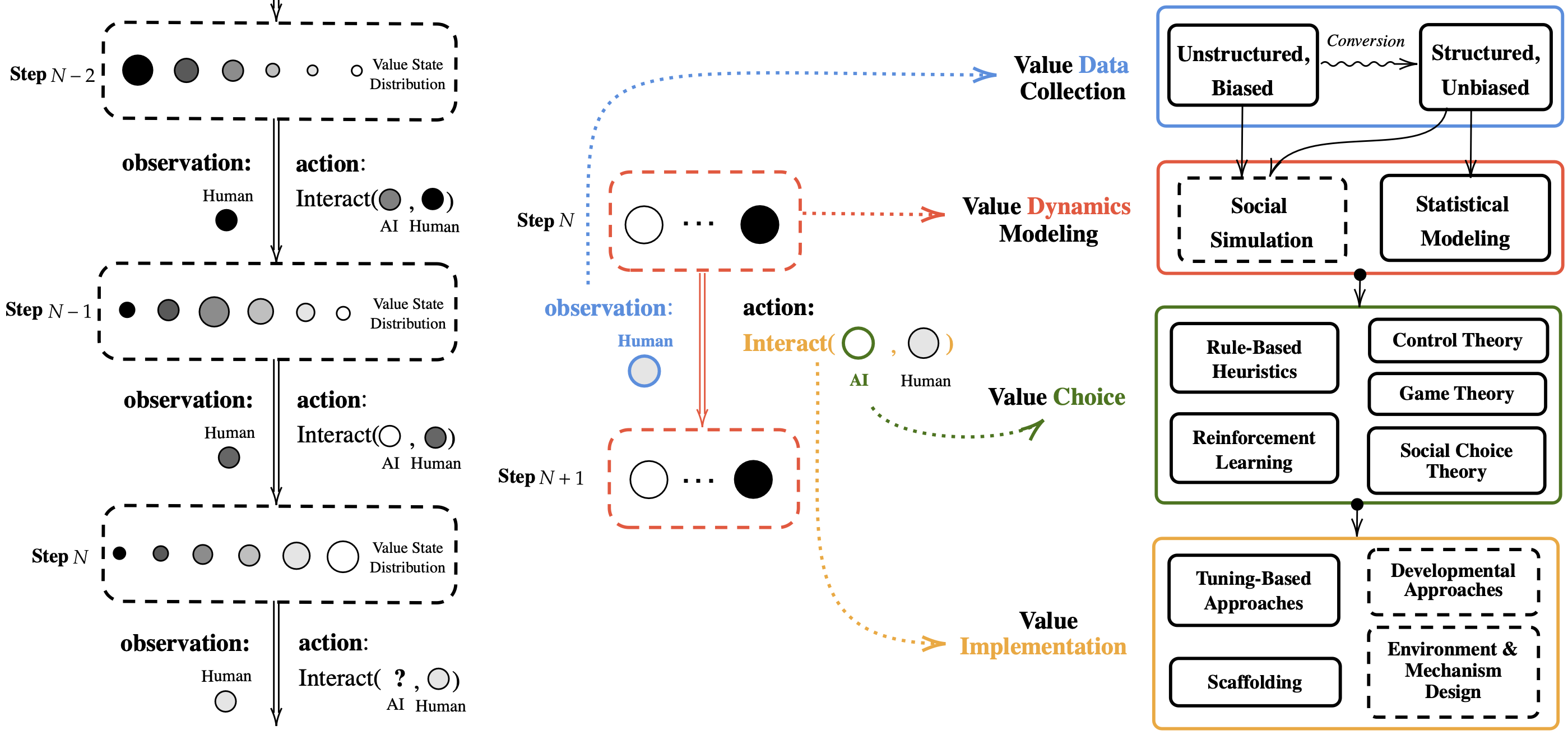

Large Language Models, AI Alignment

ACL 2025 Main.

Large Language Models, Safety Alignment, Reinforcement Learning from Human Feedback

ICLR 2024.

Spotlight

Safety Alignment, Reinforcement Learning from Human Feedback

NeurIPS 2023.

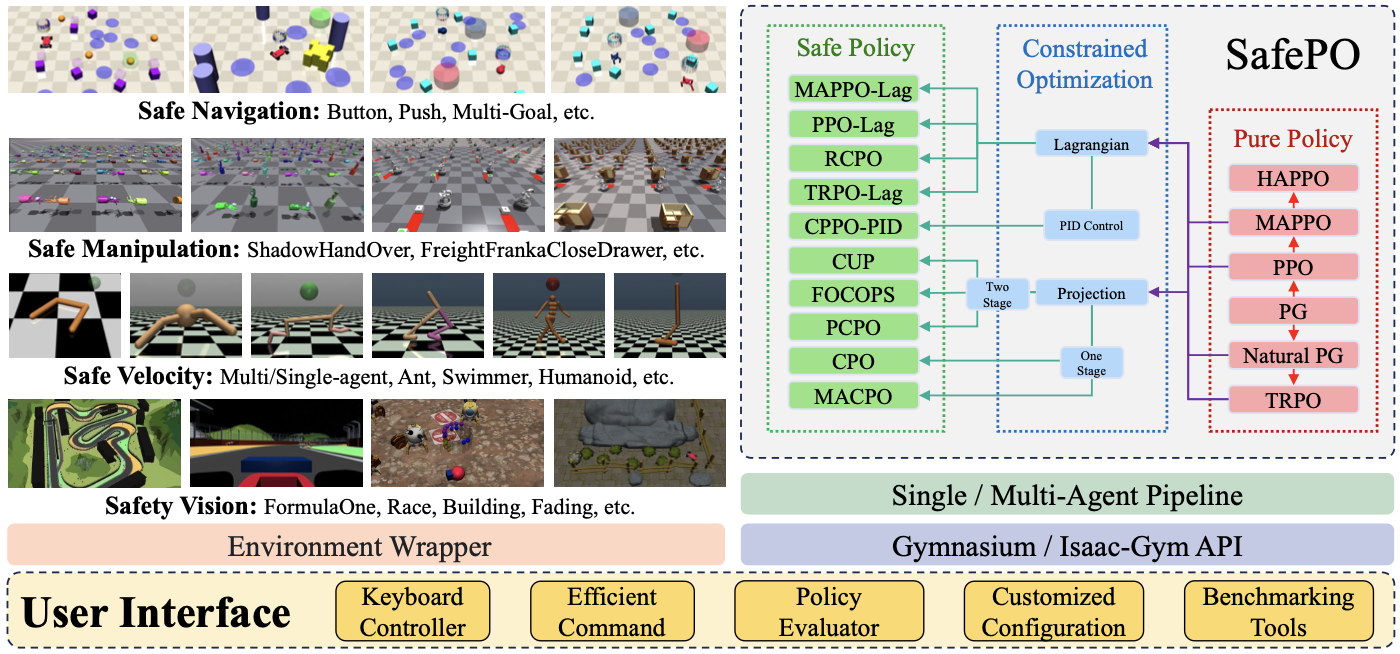

Safe Reinforcement Learning, Robotics

NeurIPS 2023.

Large Language Models, Safety Alignment, Reinforcement Learning from Human Feedback